Monte Carlo Solver¶

Introduction¶

Where as the density matrix formalism describes the ensemble average over many identical realizations of a quantum system, the Monte Carlo (MC), or quantum-jump approach to wave function evolution, allows for simulating an individual realization of the system dynamics. Here, the environment is continuously monitored, resulting in a series of quantum jumps in the system wave function, conditioned on the increase in information gained about the state of the system via the environmental measurements. In general, this evolution is governed by the Schrödinger equation with a non-Hermitian effective Hamiltonian

where again, the \(C_{n}\) are collapse operators, each corresponding to a separate irreversible process with rate \(\gamma_{n}\). Here, the strictly negative non-Hermitian portion of Eq. (1) gives rise to a reduction in the norm of the wave function, that to first-order in a small time \(\delta t\), is given by \(\left<\psi(t+\delta t)|\psi(t+\delta t)\right>=1-\delta p\) where

and \(\delta t\) is such that \(\delta p \ll 1\). With a probability of remaining in the state \(\left|\psi(t+\delta t)\right>\) given by \(1-\delta p\), the corresponding quantum jump probability is thus Eq. (2). If the environmental measurements register a quantum jump, say via the emission of a photon into the environment, or a change in the spin of a quantum dot, the wave function undergoes a jump into a state defined by projecting \(\left|\psi(t)\right>\) using the collapse operator \(C_{n}\) corresponding to the measurement

If more than a single collapse operator is present in Eq. (1), the probability of collapse due to the \(i\mathrm{th}\)-operator \(C_{i}\) is given by

Evaluating the MC evolution to first-order in time is quite tedious. Instead, QuTiP uses the following algorithm to simulate a single realization of a quantum system. Starting from a pure state \(\left|\psi(0)\right>\):

- I: Choose a random number \(r\) between zero and one, representing the probability that a quantum jump occurs.

- II: Integrate the Schrödinger equation, using the effective Hamiltonian (1) until a time \(\tau\) such that the norm of the wave function satisfies \(\left<\psi(\tau)\right.\left|\psi(\tau)\right>=r\), at which point a jump occurs.

- III: The resultant jump projects the system at time \(\tau\) into one of the renormalized states given by Eq. (3). The corresponding collapse operator \(C_{n}\) is chosen such that \(n\) is the smallest integer satisfying:

where the individual \(P_{n}\) are given by Eq. (4). Note that the left hand side of Eq. (5) is, by definition, normalized to unity.

- IV: Using the renormalized state from step III as the new initial condition at time \(\tau\), draw a new random number, and repeat the above procedure until the final simulation time is reached.

Monte Carlo in QuTiP¶

In QuTiP, Monte Carlo evolution is implemented with the qutip.mcsolve function. It takes nearly the same arguments as the qutip.mesolve

function for master-equation evolution, except that the initial state must be a ket vector, as oppose to a density matrix, and there is an optional keyword parameter ntraj that defines the number of stochastic trajectories to be simulated. By default, ntraj=500 indicating that 500 Monte Carlo trajectories will be performed.

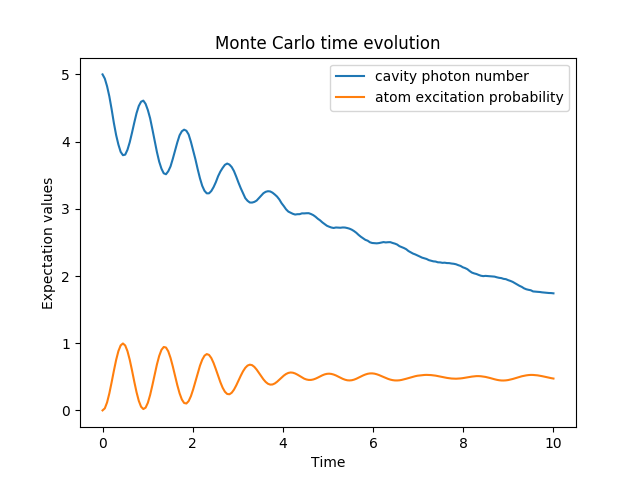

To illustrate the use of the Monte Carlo evolution of quantum systems in QuTiP, let’s again consider the case of a two-level atom coupled to a leaky cavity. The only differences to the master-equation treatment is that in this case we invoke the qutip.mcsolve function instead of qutip.mesolve

In [1]: times = np.linspace(0.0, 10.0, 200)

In [2]: psi0 = tensor(fock(2, 0), fock(10, 5))

In [3]: a = tensor(qeye(2), destroy(10))

In [4]: sm = tensor(destroy(2), qeye(10))

In [5]: H = 2 * np.pi * a.dag() * a + 2 * np.pi * sm.dag() * sm + 2 * np.pi * 0.25 * (sm * a.dag() + sm.dag() * a)

In [6]: data = mcsolve(H, psi0, times, [np.sqrt(0.1) * a], [a.dag() * a, sm.dag() * sm])

10.0%. Run time: 0.80s. Est. time left: 00:00:00:07

20.0%. Run time: 1.52s. Est. time left: 00:00:00:06

30.0%. Run time: 2.22s. Est. time left: 00:00:00:05

40.0%. Run time: 2.99s. Est. time left: 00:00:00:04

50.0%. Run time: 3.75s. Est. time left: 00:00:00:03

60.0%. Run time: 4.53s. Est. time left: 00:00:00:03

70.0%. Run time: 5.25s. Est. time left: 00:00:00:02

80.0%. Run time: 6.01s. Est. time left: 00:00:00:01

90.0%. Run time: 6.78s. Est. time left: 00:00:00:00

100.0%. Run time: 7.49s. Est. time left: 00:00:00:00

Total run time: 7.53s

In [7]: figure()

�������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[7]: <matplotlib.figure.Figure at 0x2b65c3928ac8>

In [8]: plot(times, data.expect[0], times, data.expect[1])

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[8]:

[<matplotlib.lines.Line2D at 0x2b65d004cc18>,

<matplotlib.lines.Line2D at 0x2b65d004ce10>]

In [9]: title('Monte Carlo time evolution')

�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[9]: <matplotlib.text.Text at 0x2b65d00d8898>

In [10]: xlabel('Time')

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[10]: <matplotlib.text.Text at 0x2b65c39129e8>

In [11]: ylabel('Expectation values')

��������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[11]: <matplotlib.text.Text at 0x2b65d00e60b8>

In [12]: legend(("cavity photon number", "atom excitation probability"))

����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[12]: <matplotlib.legend.Legend at 0x2b65c3fc1860>

In [13]: show()

The advantage of the Monte Carlo method over the master equation approach is that only the state vector is required to be kept in the computers memory, as opposed to the entire density matrix. For large quantum system this becomes a significant advantage, and the Monte Carlo solver is therefore generally recommended for such systems. For example, simulating a Heisenberg spin-chain consisting of 10 spins with random parameters and initial states takes almost 7 times longer using the master equation rather than Monte Carlo approach with the default number of trajectories running on a quad-CPU machine. Furthermore, it takes about 7 times the memory as well. However, for small systems, the added overhead of averaging a large number of stochastic trajectories to obtain the open system dynamics, as well as starting the multiprocessing functionality, outweighs the benefit of the minor (in this case) memory saving. Master equation methods are therefore generally more efficient when Hilbert space sizes are on the order of a couple of hundred states or smaller.

Like the master equation solver qutip.mesolve, the Monte Carlo solver returns a qutip.solver.Result object consisting of expectation values, if the user has defined expectation value operators in the 5th argument to mcsolve, or state vectors if no expectation value operators are given. If state vectors are returned, then the qutip.solver.Result returned by qutip.mcsolve will be an array of length ntraj, with each element containing an array of ket-type qobjs with the same number of elements as times. Furthermore, the output qutip.solver.Result object will also contain a list of times at which collapse occurred, and which collapse operators did the collapse, in the col_times and col_which properties, respectively.

Changing the Number of Trajectories¶

As mentioned earlier, by default, the mcsolve function runs 500 trajectories. This value was chosen because it gives good accuracy, Monte Carlo errors scale as \(1/n\) where \(n\) is the number of trajectories, and simultaneously does not take an excessive amount of time to run. However, like many other options in QuTiP you are free to change the number of trajectories to fit your needs. If we want to run 1000 trajectories in the above example, we can simply modify the call to mcsolve like:

In [14]: data = mcsolve(H, psi0, times, [np.sqrt(0.1) * a], [a.dag() * a, sm.dag() * sm], ntraj=1000)

10.0%. Run time: 1.17s. Est. time left: 00:00:00:10

20.0%. Run time: 2.27s. Est. time left: 00:00:00:09

30.0%. Run time: 3.37s. Est. time left: 00:00:00:07

40.0%. Run time: 4.51s. Est. time left: 00:00:00:06

50.0%. Run time: 5.65s. Est. time left: 00:00:00:05

60.0%. Run time: 6.78s. Est. time left: 00:00:00:04

70.0%. Run time: 7.93s. Est. time left: 00:00:00:03

80.0%. Run time: 9.06s. Est. time left: 00:00:00:02

90.0%. Run time: 10.19s. Est. time left: 00:00:00:01

100.0%. Run time: 11.29s. Est. time left: 00:00:00:00

Total run time: 11.34s

where we have added the keyword argument ntraj=1000 at the end of the inputs. Now, the Monte Carlo solver will calculate expectation values for both operators, a.dag() * a, sm.dag() * sm averaging over 1000 trajectories. Sometimes one is also interested in seeing how the Monte Carlo trajectories converge to the master equation solution by calculating expectation values over a range of trajectory numbers. If, for example, we want to average over 1, 10, 100, and 1000 trajectories, then we can input this into the solver using:

In [15]: ntraj = [1, 10, 100, 1000]

Keep in mind that the input list must be in ascending order since the total number of trajectories run by mcsolve will be calculated using the last element of ntraj. In this case, we need to use an extra index when getting the expectation values from the qutip.solver.Result object returned by mcsolve. In the above example using:

In [16]: data = mcsolve(H, psi0, times, [np.sqrt(0.1) * a], [a.dag() * a, sm.dag() * sm], ntraj=[1, 10, 100, 1000])

10.0%. Run time: 1.16s. Est. time left: 00:00:00:10

20.0%. Run time: 2.28s. Est. time left: 00:00:00:09

30.0%. Run time: 3.39s. Est. time left: 00:00:00:07

40.0%. Run time: 4.48s. Est. time left: 00:00:00:06

50.0%. Run time: 5.62s. Est. time left: 00:00:00:05

60.0%. Run time: 6.73s. Est. time left: 00:00:00:04

70.0%. Run time: 8.04s. Est. time left: 00:00:00:03

80.0%. Run time: 9.34s. Est. time left: 00:00:00:02

90.0%. Run time: 11.02s. Est. time left: 00:00:00:01

100.0%. Run time: 12.62s. Est. time left: 00:00:00:00

Total run time: 12.70s

we can extract the relevant expectation values using:

In [17]: expt10 = data.expect[1] # <- expectation values avg. over 10 trajectories

In [18]: expt100 = data.expect[2] # <- expectation values avg. over 100 trajectories

In [19]: expt1000 = data.expect[3] # <- expectation values avg. over 1000 trajectories

The Monte Carlo solver also has many available options that can be set using the qutip.solver.Options class as discussed in Setting Options for the Dynamics Solvers.

Reusing Hamiltonian Data¶

Note

This section covers a specialized topic and may be skipped if you are new to QuTiP.

In order to solve a given simulation as fast as possible, the solvers in QuTiP take the given input operators and break them down into simpler components before passing them on to the ODE solvers. Although these operations are reasonably fast, the time spent organizing data can become appreciable when repeatedly solving a system over, for example, many different initial conditions. In cases such as this, the Hamiltonian and other operators may be reused after the initial configuration, thus speeding up calculations. Note that, unless you are planning to reuse the data many times, this functionality will not be very useful.

To turn on the “reuse” functionality we must set the rhs_reuse=True flag in the qutip.solver.Options:

In [20]: options = Options(rhs_reuse=True)

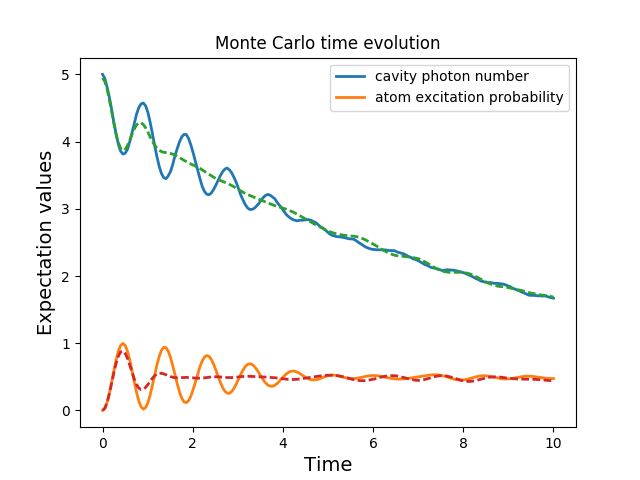

A full account of this feature is given in Setting Options for the Dynamics Solvers. Using the previous example, we will calculate the dynamics for two different initial states, with the Hamiltonian data being reused on the second call

In [21]: psi0 = tensor(fock(2, 0), fock(10, 5))

In [22]: a = tensor(qeye(2), destroy(10))

In [23]: sm = tensor(destroy(2), qeye(10))

In [24]: H = 2 * np.pi * a.dag() * a + 2 * np.pi * sm.dag() * sm + \

....: 2 * np.pi * 0.25 * (sm * a.dag() + sm.dag() * a)

....:

In [25]: data1 = mcsolve(H, psi0, times, [np.sqrt(0.1) * a], [a.dag() * a, sm.dag() * sm])

10.0%. Run time: 0.62s. Est. time left: 00:00:00:05

20.0%. Run time: 1.17s. Est. time left: 00:00:00:04

30.0%. Run time: 1.75s. Est. time left: 00:00:00:04

40.0%. Run time: 2.32s. Est. time left: 00:00:00:03

50.0%. Run time: 2.89s. Est. time left: 00:00:00:02

60.0%. Run time: 3.43s. Est. time left: 00:00:00:02

70.0%. Run time: 3.99s. Est. time left: 00:00:00:01

80.0%. Run time: 4.55s. Est. time left: 00:00:00:01

90.0%. Run time: 5.10s. Est. time left: 00:00:00:00

100.0%. Run time: 5.67s. Est. time left: 00:00:00:00

Total run time: 5.74s

In [26]: psi1 = tensor(fock(2, 0), coherent(10, 2 - 1j))

In [27]: opts = Options(rhs_reuse=True) # Run a second time, reusing RHS

In [28]: data2 = mcsolve(H, psi1, times, [np.sqrt(0.1) * a], [a.dag() * a, sm.dag() * sm], options=opts)

10.0%. Run time: 1.07s. Est. time left: 00:00:00:09

20.0%. Run time: 2.08s. Est. time left: 00:00:00:08

30.0%. Run time: 3.12s. Est. time left: 00:00:00:07

40.0%. Run time: 4.16s. Est. time left: 00:00:00:06

50.0%. Run time: 5.14s. Est. time left: 00:00:00:05

60.0%. Run time: 6.23s. Est. time left: 00:00:00:04

70.0%. Run time: 7.44s. Est. time left: 00:00:00:03

80.0%. Run time: 8.49s. Est. time left: 00:00:00:02

90.0%. Run time: 9.49s. Est. time left: 00:00:00:01

100.0%. Run time: 10.56s. Est. time left: 00:00:00:00

Total run time: 10.64s

In [29]: figure()

�������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[29]: <matplotlib.figure.Figure at 0x2b65c2d9c240>

In [30]: plot(times, data1.expect[0], times, data1.expect[1], lw=2)

�������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[30]:

[<matplotlib.lines.Line2D at 0x2b65c2aad908>,

<matplotlib.lines.Line2D at 0x2b65c2aad0b8>]

In [31]: plot(times, data2.expect[0], '--', times, data2.expect[1], '--', lw=2)

�������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[31]:

[<matplotlib.lines.Line2D at 0x2b65c25d4d68>,

<matplotlib.lines.Line2D at 0x2b65c25d4e48>]

In [32]: title('Monte Carlo time evolution')

�������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[32]: <matplotlib.text.Text at 0x2b65c2a1f208>

In [33]: xlabel('Time', fontsize=14)

���������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[33]: <matplotlib.text.Text at 0x2b65c261ea90>

In [34]: ylabel('Expectation values', fontsize=14)

�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[34]: <matplotlib.text.Text at 0x2b65c2963ac8>

In [35]: legend(("cavity photon number", "atom excitation probability"))

�������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������Out[35]: <matplotlib.legend.Legend at 0x2b65c2d9ca20>

In [36]: show()

In addition to the initial state, one may reuse the Hamiltonian data when changing the number of trajectories ntraj or simulation times times. The reusing of Hamiltonian data is also supported for time-dependent Hamiltonians. See Solving Problems with Time-dependent Hamiltonians for further details.

Fortran Based Monte Carlo Solver¶

Note

In order to use the Fortran Monte Carlo solver, you must have the blas development libraries, and installed QuTiP using the flag: --with-f90mc.

In performing time-independent Monte Carlo simulations with QuTiP, systems with small Hilbert spaces suffer from poor performance as the ODE solver must exit the ODE solver at each time step and check for the state vector norm. To correct this, QuTiP now includes an optional Fortran based Monte Carlo solver that has enhanced performance for systems with small Hilbert space dimensionality. Using the Fortran based solver is extremely simple; one just needs to replace mcsolve with mcsolve_f90. For example, from our previous demonstration

In [37]: data1 = mcsolve_f90(H, psi0, times, [np.sqrt(0.1) * a], [a.dag() * a, sm.dag() * sm])

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-37-f664f76fd9fd> in <module>()

----> 1 data1 = mcsolve_f90(H, psi0, times, [np.sqrt(0.1) * a], [a.dag() * a, sm.dag() * sm])

NameError: name 'mcsolve_f90' is not defined

In using the Fortran solver, there are a few limitations that must be kept in mind. First, this solver only works for time-independent systems. Second, you can not pass a list of trajectories to ntraj.